Posted by willcritchlow

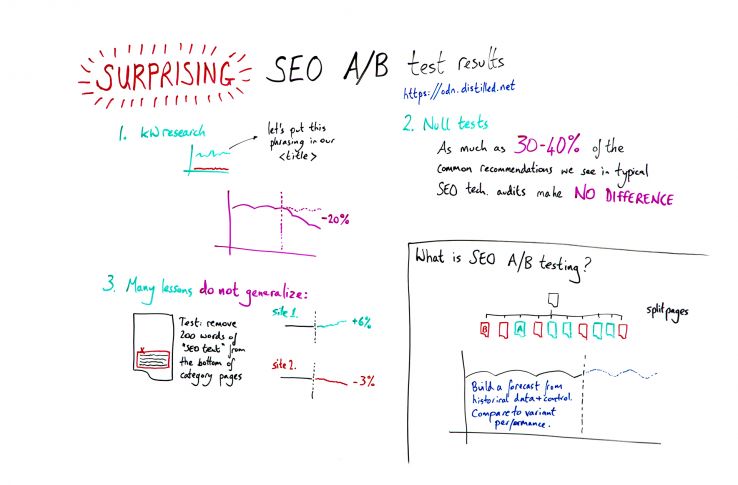

You can make all the tweaks and changes in the world, but how do you know they’re the best choice for the site you’re working on? Without data to support your hypotheses, it’s hard to say. In this week’s edition of Whiteboard Friday, Will Critchlow explains a bit about what A/B testing for SEO entails and describes some of the surprising results he’s seen that prove you can’t always trust your instinct in our industry.


Video Transcription

Hi, everyone. Welcome to another British Whiteboard Friday. My name is Will Critchlow. I’m the founder and CEO at Distilled. At Distilled, one of the things that we’ve been working on recently is building an SEO A/B testing platform. It’s called the ODN, the Optimization Delivery Network. We’re now deployed on a bunch of big sites, and we’ve been running these SEO A/B tests for a little while. I want to tell you about some of the surprising results that we’ve seen.

What is SEO A/B testing?

We’re going to link to some resources that will show you more about what SEO A/B testing is. But very quickly, the general principle is that you take a site section (a set of pages that share a similar structure, layout, template, and so forth) and you split those pages into control and variant, so a group of A pages and a group of B pages.
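As a rough illustration of the splitting step, here is a minimal Python sketch. It assumes you have historical organic sessions for each page; the `Page` type and `split_pages` function are hypothetical and are not part of the ODN. It uses a simple stratified split: pages are sorted by traffic, paired off, and each pair is randomly divided between control and variant, so both groups end up with a similar mix of high- and low-traffic pages.

```python
import random
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    sessions: int  # hypothetical: historical organic sessions for this page


def split_pages(pages, seed=0):
    """Stratified split: pair pages of similar traffic, then randomize within each pair."""
    rng = random.Random(seed)  # fixed seed so the same split can be reproduced
    ranked = sorted(pages, key=lambda p: p.sessions, reverse=True)
    control, variant = [], []
    for i in range(0, len(ranked), 2):
        pair = ranked[i:i + 2]
        rng.shuffle(pair)  # coin flip for which page of the pair gets the change
        control.append(pair[0])
        if len(pair) == 2:
            variant.append(pair[1])  # with an odd count, the last page goes to control
    return control, variant


if __name__ == "__main__":
    pages = [Page(f"/category/{n}", s)
             for n, s in enumerate([900, 850, 400, 380, 120, 100, 60])]
    control, variant = split_pages(pages)
    print("control:", [p.url for p in control])
    print("variant:", [p.url for p in variant])
```

Randomizing within traffic-matched pairs, rather than flipping a coin per page, is one way to keep a handful of very high-traffic pages from all landing in the same bucket; a production tool may well use a different balancing method.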

Then you make the change you hypothesize will make a difference to just one of those groups of pages, and you leave the other group unchanged. Then, using your analytics data, you build a forecast of what would have happened to the variant pages if you hadn’t made any changes to them, and you compare what actually happens against that forecast. Out of that you get statistical confidence intervals, and you get to say: yes, this is an uplift; or there was no difference; or no, this hurt the performance of your site.
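The forecast-and-compare step can be sketched in the same hedged way. The Causal Impact tool listed in the resources uses a Bayesian structural time-series model; the Python below swaps in something much simpler: an ordinary least-squares fit of the variant group’s daily organic sessions against the control group’s during the pre-change period, which is then used to forecast what the variant pages would have done without the change. The function names, the input layout, and the rough ±1.96 standard-error interval are assumptions for illustration. The interval ignores autocorrelation and parameter uncertainty, so it will tend to be too narrow.

```python
import math
from statistics import mean


def fit_line(x, y):
    """Ordinary least squares fit of y ≈ a + b * x."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    return my - b * mx, b


def estimate_uplift(control_pre, variant_pre, control_post, variant_post, z=1.96):
    """Compare variant traffic after the change with a forecast built from control traffic.

    Inputs are lists of daily organic sessions (hypothetical data layout);
    at least three pre-period days are needed.
    """
    a, b = fit_line(control_pre, variant_pre)
    residuals = [v - (a + b * c) for c, v in zip(control_pre, variant_pre)]
    # Residual standard error of the pre-period fit (n - 2 degrees of freedom).
    resid_se = math.sqrt(sum(r * r for r in residuals) / (len(residuals) - 2))

    forecast = sum(a + b * c for c in control_post)  # counterfactual total for variant pages
    effect = sum(variant_post) - forecast
    # Rough interval: treats post-period daily errors as independent.
    half_width = z * resid_se * math.sqrt(len(variant_post))
    return {
        "uplift_pct": 100 * effect / forecast,
        "ci_pct": (100 * (effect - half_width) / forecast,
                   100 * (effect + half_width) / forecast),
    }


def verdict(ci_pct):
    """Map the interval onto the three outcomes: uplift, no difference, or harm."""
    low, high = ci_pct
    if low > 0:
        return "uplift"
    if high < 0:
        return "negative"
    return "no detectable difference"


if __name__ == "__main__":
    control_pre = [100, 110, 95, 105, 120, 98, 102]
    variant_pre = [2 * c + d for c, d in zip(control_pre, [1, -1, 2, -2, 1, -1, 0])]
    control_post = [100, 105, 110]
    variant_post = [2.2 * c for c in control_post]  # synthetic data with a built-in 10% lift
    result = estimate_uplift(control_pre, variant_pre, control_post, variant_post)
    print(result, verdict(result["ci_pct"]))
```

The data in the usage example is synthetic. With real traffic, weekly seasonality and day-to-day correlation matter a lot, which is one reason a proper time-series model such as Causal Impact is preferable to this simple regression.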

This is data that we’ve never really had in SEO before, because it is very different from running a controlled experiment in a lab environment or on a test domain. This is in the wild, on real, live websites. So let’s get to the results. The first surprising result I want to talk about is based on some of the most basic advice you’ve ever seen.

Result #1: Targeting higher-volume keywords can actually result in traffic drops

I’ve stood on stage and given this advice. I have recommended this stuff to clients. Probably you have too. You know the process: you do some keyword research and find that one particular way of searching for whatever you offer has more search volume than the phrasing you currently use on your website.

You make the recommendation, “Let’s talk about this stuff on our website the way that people are searching for it. Let’s put this phrasing in our title and elsewhere on our pages.” I’ve made those recommendations. You’ve probably made those recommendations. They don’t always work. We’ve now tested this kind of change a few times and seen some genuinely dramatic drops.

In some cases we saw drops of more than 20% in organic traffic after updating titles and other meta information to target the more commonly searched-for variant. There are various possible reasons for this. Maybe you end up with a worse click-through rate from the search results, so you rank where you used to but get fewer clicks. Maybe you move up a little for the higher-volume target term, but you move down for the original term, and the new term is more competitive.

So yes, you’ve moved up a little, but you’re still out of the running for the new term, so it’s a net loss. Or maybe you end up ranking for fewer variations of key phrases on these pages. However it happens, you can’t be certain that simply putting the higher-volume phrasing on your pages is going to perform better. So that’s surprising result number one. Surprising result number two is possibly not that surprising, but I think it’s pretty important.

Result #2: 30–40% of common tech audit recommendations make no difference

We see that as many as 30% or 40% of the common recommendations in a classic tech audit make no difference. You do all of this work auditing the website. You follow SEO best practices. You find something that, in theory, makes the website better. You go and make the change. You test it.

Nothing. It flatlines. You get the same performance as the forecast, as if you had made no change. This is a big deal because making these kinds of recommendations is what damages trust with engineers and product teams. You’re constantly asking them to do stuff, they do it, and there’s no difference, so they feel like it’s pointless. That is too often what burns your authority with engineering teams.

This is one of the reasons we built the platform: we can take our 20 recommendations and hypotheses, test them all, find the 5 or 6 that move the needle, and only go to the engineering team to build those. That builds a lot of trust and a better relationship over time, and the engineers get to spend their time on product work that moves the needle.

So the big takeaway is to be a bit skeptical about some of this stuff. At the limit, best practices probably do make a difference. If everything else were equal and you made that one tiny tweak to the alt attribute of a particular image somewhere deep on the page, maybe that would have made the difference.

But is it going to move you up in a competitive ranking environment? That’s what we need to be skeptical about.

Result #3: Many lessons don’t generalize

Surprising result number three is how many lessons do not generalize. We’ve seen this broadly: across different sections of the same website, and even across different industries. Some of this is about the competitive dynamics of the industry.

Some of it is probably just the complexity of the ranking algorithm these days. But we see it in particular with things like SEO text on category pages. Who’s seen that? You’ve got all of your products listed, and then somebody says, “You know what? We need 200 or 250 words that mention our key phrase a bunch of times down at the bottom of the page.” Sometimes, helpfully, your engineers will even put this in an SEO-text div for you.

So we see this pretty often, and we’ve tested removing it. We said, “You know what? No users are looking at this. We know that overstuffing the keyword on the page can be a negative ranking signal. I wonder if we’ll do better if we just cut that div.” So we removed it, and the first time we did it, we got a 6% uplift. This was a good thing.

The pages are better without it. They’re now ranking better. We’re getting better performance. So we say, “You know what? We’ve learnt this lesson. You should remove this really low-quality text from the bottom of your category pages.” But then we tested it on another site and saw a drop: a small one, admittedly, but the text was helping on those particular pages.

So I think what that tells us is that we need to test these recommendations every time and build testing into our core methodologies. I think this trend is only going to continue. The more complex the ranking algorithms get, and the more machine learning is baked into them, the less deterministic they are. And the more competitive markets get, the narrower the gap between you and your competitors. Both make all of this less stable: the differences get smaller, and there is more opportunity for something that works in one place to be null or negative in another.

So I hope I have inspired you to check out some SEO A/B testing. We’re going to link to some resources that describe how SEO A/B testing works, how you can do it yourself, and how you can build a program around it, as well as some of our other case studies and lessons that we’ve learnt. I hope you enjoyed this journey through some surprising results from SEO A/B tests.

Resources:

- SEO Split-Testing: How to A/B Test Changes for Google

- Do it Yourself SEO Split Testing Tool With Causal Impact

- Case studies:

Video transcription by Speechpad.com
